Recalibration without end?

Episode 8

Author: Hanna Nennewitz

Recalibration without end?

Episode 8

Author: Hanna Nennewitz

A rule-based system works very reliably under optimal inspection conditions and with constant parts. The situation is different if the parts come out of the machine slightly differently from batch to batch, but are still OK parts. Recalibration can then quickly become time-consuming and costly. It's a different story with AI-based systems like the Maddox AI system. This system can learn that parts can look different and still be OK parts.

In episode 4, Peter Droege told me about a customer who produces parts using the injection molding process. This customer got the parts inspected by a rule-based system and had a big problem with pseudo scrap, as the OK parts sometimes looked very different. As a result, the system had to be recalibrated very frequently. After installing the Maddox AI system, these problems no longer existed. I will discuss this recalibration issue in more depth in this blog post. In doing so, I explain both why high recalibration efforts can occur with rule-based systems, how AI deals with “data drifts”, and what recalibration looks like with AI-based systems. For this, I talk to David Rebhan, Hardware Engineer at Maddox AI, who explains the recalibration of rule-based systems in more detail. In addition, Alexander Böttcher, ML Engineer at Maddox AI, gives me more detailed information about recalibrating AI-based systems and how the process differs from rule-based systems.

In our conversation, David explains that rule-based systems are also very reliable inspection systems under perfect conditions: “If you use a rule-based system and it always tests the same parts under the same environmental conditions, then recalibration is rarely needed.” That’s because thresholds can’t be falsely breached if test conditions remain the same. I have already dealt with this in more detail in episode 2. According to David, the main reason why rule-based systems need to be recalibrated is that products change in some way. This can be due to production fluctuations, for example, which sometimes make parts a little lighter/darker or more/less glossy. “Often, in this case, you have to adjust certain parameters. This changing of parameters is done relatively quickly by someone who is familiar with the program. But afterwards, of course, you have to test whether the inspection works one hundred percent again.” And it’s at this point that you can get into a correction loop, because you have to keep adjusting the parameters and then test the system. Depending on the application, that can take a few days or sometimes weeks. However, how often the recalibration of rule-based systems has to be carried out and how time-consuming this is depends on the respective application. The more often this recalibration has to be carried out, the more expensive and, of course, the greater the effort. The customer Peter Droege told me about in episode 5 had to recalibrate his threshold values almost daily, which not only led to high costs but also to low acceptance of the original system. Consequently, it made sense to think about an alternative testing logic, in this case an AI-based testing system. But how does AI deal with new framework conditions, so-called “data drifts”?

Alexander explains to me in our conversation that an important basis for dealing with data drifts is working with pre-trained networks: “These networks have already been shown a great amount of annotated images. These are not just images of, for example, turned parts, but also of landscapes, houses, animals, food, and so on. This has the effect that the networks can already recognize corners and edges on their own.”

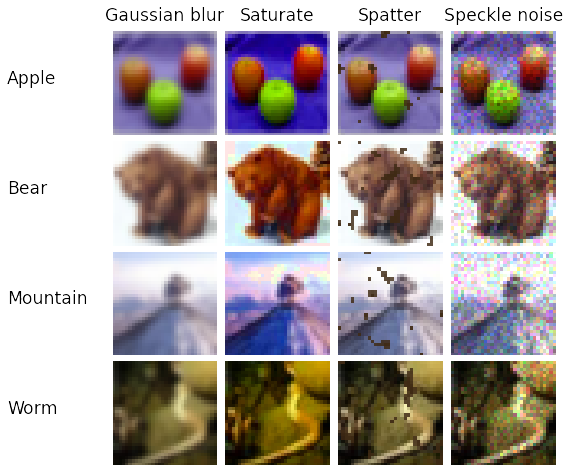

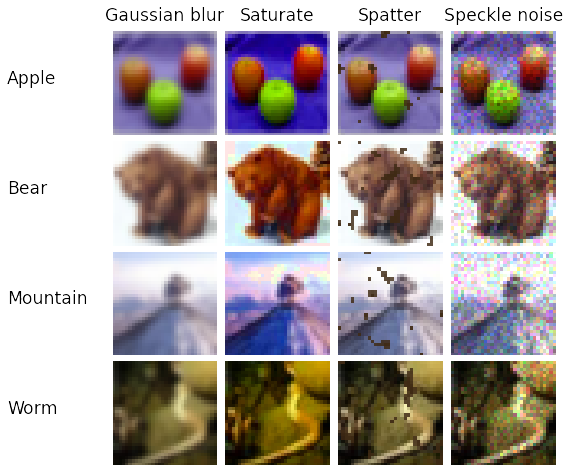

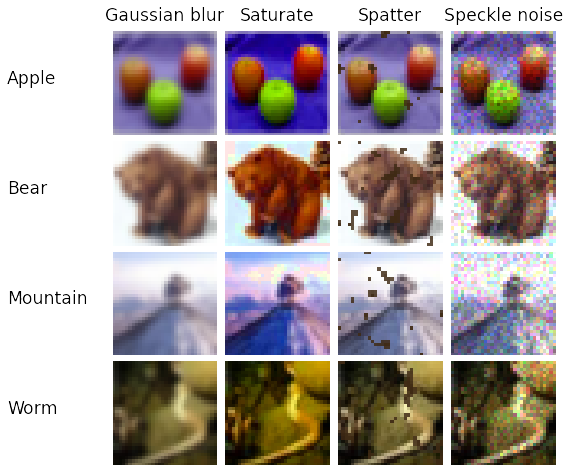

In addition, AI models are ideally already prepared for possible data drifts during training for the specific use case. One lever here is data augmentation. In data augmentation, possible image changes are taken into account during training. To make this procedure more understandable, Alexander shows me a graphic:

In our conversation, David explains that rule-based systems are also very reliable inspection systems under perfect conditions: “If you use a rule-based system and it always tests the same parts under the same environmental conditions, then recalibration is rarely needed.” That’s because thresholds can’t be falsely breached if test conditions remain the same. I have already dealt with this in more detail in episode 2. According to David, the main reason why rule-based systems need to be recalibrated is that products change in some way. This can be due to production fluctuations, for example, which sometimes make parts a little lighter/darker or more/less glossy. “Often, in this case, you have to adjust certain parameters. This changing of parameters is done relatively quickly by someone who is familiar with the program. But afterwards, of course, you have to test whether the inspection works one hundred percent again.” And it’s at this point that you can get into a correction loop, because you have to keep adjusting the parameters and then test the system. Depending on the application, that can take a few days or sometimes weeks. However, how often the recalibration of rule-based systems has to be carried out and how time-consuming this is depends on the respective application. The more often this recalibration has to be carried out, the more expensive and, of course, the greater the effort. The customer Peter Droege told me about in episode 5 had to recalibrate his threshold values almost daily, which not only led to high costs but also to low acceptance of the original system. Consequently, it made sense to think about an alternative testing logic, in this case an AI-based testing system. But how does AI deal with new framework conditions, so-called “data drifts”?

Alexander explains to me in our conversation that an important basis for dealing with data drifts is working with pre-trained networks: “These networks have already been shown a great amount of annotated images. These are not just images of, for example, turned parts, but also of landscapes, houses, animals, food, and so on. This has the effect that the networks can already recognize corners and edges on their own.”

In addition, AI models are ideally already prepared for possible data drifts during training for the specific use case. One lever here is data augmentation. In data augmentation, possible image changes are taken into account during training. To make this procedure more understandable, Alexander shows me a graphic:

In our conversation, David explains that rule-based systems are also very reliable inspection systems under perfect conditions: “If you use a rule-based system and it always tests the same parts under the same environmental conditions, then recalibration is rarely needed.” That’s because thresholds can’t be falsely breached if test conditions remain the same. I have already dealt with this in more detail in episode 2. According to David, the main reason why rule-based systems need to be recalibrated is that products change in some way. This can be due to production fluctuations, for example, which sometimes make parts a little lighter/darker or more/less glossy. “Often, in this case, you have to adjust certain parameters. This changing of parameters is done relatively quickly by someone who is familiar with the program. But afterwards, of course, you have to test whether the inspection works one hundred percent again.” And it’s at this point that you can get into a correction loop, because you have to keep adjusting the parameters and then test the system. Depending on the application, that can take a few days or sometimes weeks. However, how often the recalibration of rule-based systems has to be carried out and how time-consuming this is depends on the respective application. The more often this recalibration has to be carried out, the more expensive and, of course, the greater the effort. The customer Peter Droege told me about in episode 5 had to recalibrate his threshold values almost daily, which not only led to high costs but also to low acceptance of the original system. Consequently, it made sense to think about an alternative testing logic, in this case an AI-based testing system. But how does AI deal with new framework conditions, so-called “data drifts”?

Alexander explains to me in our conversation that an important basis for dealing with data drifts is working with pre-trained networks: “These networks have already been shown a great amount of annotated images. These are not just images of, for example, turned parts, but also of landscapes, houses, animals, food, and so on. This has the effect that the networks can already recognize corners and edges on their own.”

In addition, AI models are ideally already prepared for possible data drifts during training for the specific use case. One lever here is data augmentation. In data augmentation, possible image changes are taken into account during training. To make this procedure more understandable, Alexander shows me a graphic:

“On the graphic you can see that changes have been made to the images. The apples are shown blurrier, more saturated, speckled with spots and more pixelated. The model doesn’t just see natural images. The natural images are additionally modified by various so-called augmentation strategies to expand the training base,” Alexander explains. Dr. Wieland Brendel, co-founder of Maddox AI, has shown in various publications that training with augmented and stylized images leads to more robust AI models. In addition to many smaller technical levers (e.g., batchnorm), unsupervised learning on a large amount of unannotated data represents another relevant lever for addressing data drifts. Alexander explains, “Unsupervised learning means that the model is not given any instructions on what to learn. It is simply shown many different non-annotated images on which the model is expected to independently recognize patterns, relationships, and structures.” And last but not least, expanding the original database also helps to keep data drifts in mind during training.

This sounds rather abstract and complicated at first, but the simplified idea behind it is as follows: You try to show the model as many different scenarios as possible already in training, so that you virtually force the model to focus less on the changing framework conditions, such as the changing brightness of the parts, and instead concentrate on the relevant errors in the image. Therefore, the optimized AI model ultimately does not care whether the part under review is produced slightly darker or brighter. It has learned that these production fluctuations are normal. By way of comparison, you can imagine this as if you were working through a text. First, you read through the whole text to understand all the connections. However, you mark the most important parts. When you look at the text again later, you already know the connections. Therefore, one can then simply concentrate on the most important marked contents without being distracted by unimportant subordinate clauses. Thanks to the described levers, appropriately optimized AI-based systems react much more robustly to data drifts than rule-based systems. For example, completeness checks do not even need to be recalibrated with Maddox AI.

“On the graphic you can see that changes have been made to the images. The apples are shown blurrier, more saturated, speckled with spots and more pixelated. The model doesn’t just see natural images. The natural images are additionally modified by various so-called augmentation strategies to expand the training base,” Alexander explains. Dr. Wieland Brendel, co-founder of Maddox AI, has shown in various publications that training with augmented and stylized images leads to more robust AI models. In addition to many smaller technical levers (e.g., batchnorm), unsupervised learning on a large amount of unannotated data represents another relevant lever for addressing data drifts. Alexander explains, “Unsupervised learning means that the model is not given any instructions on what to learn. It is simply shown many different non-annotated images on which the model is expected to independently recognize patterns, relationships, and structures.” And last but not least, expanding the original database also helps to keep data drifts in mind during training.

This sounds rather abstract and complicated at first, but the simplified idea behind it is as follows: You try to show the model as many different scenarios as possible already in training, so that you virtually force the model to focus less on the changing framework conditions, such as the changing brightness of the parts, and instead concentrate on the relevant errors in the image. Therefore, the optimized AI model ultimately does not care whether the part under review is produced slightly darker or brighter. It has learned that these production fluctuations are normal. By way of comparison, you can imagine this as if you were working through a text. First, you read through the whole text to understand all the connections. However, you mark the most important parts. When you look at the text again later, you already know the connections. Therefore, one can then simply concentrate on the most important marked contents without being distracted by unimportant subordinate clauses. Thanks to the described levers, appropriately optimized AI-based systems react much more robustly to data drifts than rule-based systems. For example, completeness checks do not even need to be recalibrated with Maddox AI.

“On the graphic you can see that changes have been made to the images. The apples are shown blurrier, more saturated, speckled with spots and more pixelated. The model doesn’t just see natural images. The natural images are additionally modified by various so-called augmentation strategies to expand the training base,” Alexander explains. Dr. Wieland Brendel, co-founder of Maddox AI, has shown in various publications that training with augmented and stylized images leads to more robust AI models. In addition to many smaller technical levers (e.g., batchnorm), unsupervised learning on a large amount of unannotated data represents another relevant lever for addressing data drifts. Alexander explains, “Unsupervised learning means that the model is not given any instructions on what to learn. It is simply shown many different non-annotated images on which the model is expected to independently recognize patterns, relationships, and structures.” And last but not least, expanding the original database also helps to keep data drifts in mind during training.

This sounds rather abstract and complicated at first, but the simplified idea behind it is as follows: You try to show the model as many different scenarios as possible already in training, so that you virtually force the model to focus less on the changing framework conditions, such as the changing brightness of the parts, and instead concentrate on the relevant errors in the image. Therefore, the optimized AI model ultimately does not care whether the part under review is produced slightly darker or brighter. It has learned that these production fluctuations are normal. By way of comparison, you can imagine this as if you were working through a text. First, you read through the whole text to understand all the connections. However, you mark the most important parts. When you look at the text again later, you already know the connections. Therefore, one can then simply concentrate on the most important marked contents without being distracted by unimportant subordinate clauses. Thanks to the described levers, appropriately optimized AI-based systems react much more robustly to data drifts than rule-based systems. For example, completeness checks do not even need to be recalibrated with Maddox AI.

Nevertheless, there are cases where even AI-based systems need to be recalibrated or, more precisely, retrained. “In many of our projects, the customer’s internal definition of defects changes over time. At the beginning, for example, all types of scratches should be detected, while later it turns out that micro-scratches can be ignored. In such cases, we have to retrain the model,” Alexander explains. But what does the process of retraining look like in an AI-based system? Alexander explains, “The basis of our application is the database. During re-calibration, this needs to be updated. Typically, the annotations of the images are adapted to the new requirements. For example, if the system is rejecting defects that are too small, images in the database with similar characteristics must be stored as OK. This way, the model understands that it doesn’t need to reject parts with very small defects.” This post-training can be done by humans without an AI background, thanks to the high usability of the Maddox AI system, which I showed in episode 6. The only important conditions should be that the recalibrating person should be an expert for the defects, since they have to decide what should be counted as a defect and what should not.

All this leads me to the conclusion that rule-based systems can also reliably perform inspection tasks, but only for very simple use cases and under perfect inspection conditions. Once the use cases become more complex, AI-based systems provide a robust quality control solution. This is especially true since an AI-based system like Maddox AI learns over time that parts can be produced differently. This continuous learning allows me to reach a state where I don’t need to recalibrate at all, or more accurately, retrain. Rule-based systems, on the other hand, do not learn over time, so in the worst case scenario, I am trapped in a constant recalibration iteration.

If your team is also suffering from high recalibration efforts, feel free to contact us. Our team will be happy to explain how you too can minimize the recalibration effort of your visual inspection systems.

Nevertheless, there are cases where even AI-based systems need to be recalibrated or, more precisely, retrained. “In many of our projects, the customer’s internal definition of defects changes over time. At the beginning, for example, all types of scratches should be detected, while later it turns out that micro-scratches can be ignored. In such cases, we have to retrain the model,” Alexander explains. But what does the process of retraining look like in an AI-based system? Alexander explains, “The basis of our application is the database. During re-calibration, this needs to be updated. Typically, the annotations of the images are adapted to the new requirements. For example, if the system is rejecting defects that are too small, images in the database with similar characteristics must be stored as OK. This way, the model understands that it doesn’t need to reject parts with very small defects.” This post-training can be done by humans without an AI background, thanks to the high usability of the Maddox AI system, which I showed in episode 6. The only important conditions should be that the recalibrating person should be an expert for the defects, since they have to decide what should be counted as a defect and what should not.

All this leads me to the conclusion that rule-based systems can also reliably perform inspection tasks, but only for very simple use cases and under perfect inspection conditions. Once the use cases become more complex, AI-based systems provide a robust quality control solution. This is especially true since an AI-based system like Maddox AI learns over time that parts can be produced differently. This continuous learning allows me to reach a state where I don’t need to recalibrate at all, or more accurately, retrain. Rule-based systems, on the other hand, do not learn over time, so in the worst case scenario, I am trapped in a constant recalibration iteration.

If your team is also suffering from high recalibration efforts, feel free to contact us. Our team will be happy to explain how you too can minimize the recalibration effort of your visual inspection systems.

Nevertheless, there are cases where even AI-based systems need to be recalibrated or, more precisely, retrained. “In many of our projects, the customer’s internal definition of defects changes over time. At the beginning, for example, all types of scratches should be detected, while later it turns out that micro-scratches can be ignored. In such cases, we have to retrain the model,” Alexander explains. But what does the process of retraining look like in an AI-based system? Alexander explains, “The basis of our application is the database. During re-calibration, this needs to be updated. Typically, the annotations of the images are adapted to the new requirements. For example, if the system is rejecting defects that are too small, images in the database with similar characteristics must be stored as OK. This way, the model understands that it doesn’t need to reject parts with very small defects.” This post-training can be done by humans without an AI background, thanks to the high usability of the Maddox AI system, which I showed in episode 6. The only important conditions should be that the recalibrating person should be an expert for the defects, since they have to decide what should be counted as a defect and what should not.

All this leads me to the conclusion that rule-based systems can also reliably perform inspection tasks, but only for very simple use cases and under perfect inspection conditions. Once the use cases become more complex, AI-based systems provide a robust quality control solution. This is especially true since an AI-based system like Maddox AI learns over time that parts can be produced differently. This continuous learning allows me to reach a state where I don’t need to recalibrate at all, or more accurately, retrain. Rule-based systems, on the other hand, do not learn over time, so in the worst case scenario, I am trapped in a constant recalibration iteration.

If your team is also suffering from high recalibration efforts, feel free to contact us. Our team will be happy to explain how you too can minimize the recalibration effort of your visual inspection systems.